
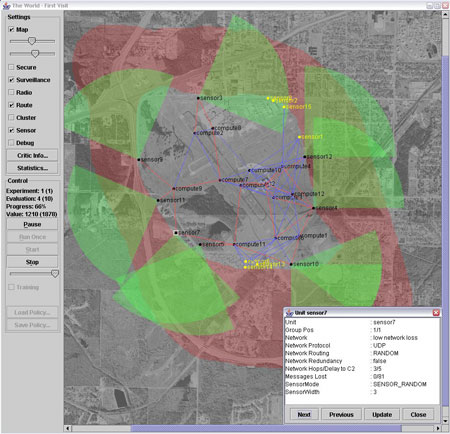

Towards Self-Managing Systems

by Joakim Eriksson, Niclas Finne and Sverker Janson

Scientists at SICS are addressing the problem of ensuring dependability and performance of networked systems in the face of uncertain, and often largely unknown, environments. In a first experiment, they compute automatic configuration rules for sensor networks, maximising sensor coverage and minimising radio communication and network latency.

As computing systems become more complex, management problems and costs tend to increase. IBM’s Autonomic Computing initiative proposes to address this challenge by making computing systems more self-managing: self-configuring, self-healing, self-optimising and self-protecting, from individual components to complete environments.

An increasingly popular approach to self-management is to apply concepts and techniques from automatic control. Traditional linear control methods are well known, well understood, easy to implement and have a wide range of applications. A simple illustrative example is regulating the CPU load and memory use of a Web server using multiple-input, multiple-output (MIMO) linear control.

Controlling an entire networked system is much harder. The system components all have local observation and control points and an incomplete knowledge of the system state. The effect of a control action is uncertain, interacts with the actions of other components, and can be much delayed. Optimal decentralised control is known to be computationally intractable, but this is essentially the problem facing system managers and self-management mechanisms.

Sensor network management is a case in point. The advent of low-cost sensor units (sound, light, vibration, temperature etc), which include limited but useful computing and wireless communication support, enables high-resolution monitoring by quickly deployed and cost-efficient sensor networks. Sensor units are distributed over an area, forming a wireless network, and data is collected and routed to users tapping into the network.
The technology has a wide range of potential scientific, civilian and military applications.

Ideally, sensor networks should be plug-and-play. Following deployment, the sensor units should automatically configure themselves for the best possible performance based on locally available information, eg the perceived local density of sensors and the network throughput. If units then malfunction or are moved, for example, or if changes in the environment or power supply alter the connectivity, the network should automatically reconfigure.

We are investigating methods for computing task-specific self-configuration policies that can be downloaded to sensor units prior to (or during) a mission. A policy is a set of rules that assigns configuration actions to sensor unit states. Self-configuration is achieved as the sensor unit repeatedly checks whether its current state matches any rule and, if so, performs the corresponding configuration action. Such policy execution can be done efficiently with the limited computing resources of the sensor unit.

Figure 1 shows the simulation and optimisation tool that has been developed. The scenario illustrated is to monitor the perimeter security zone of an airport using a combination of sensor units and more powerful link and fusion nodes connected by a wireless network. The information is collected at the command and control centre.
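The rule-matching loop that makes up policy execution can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the format used by the SICS tool: the state fields (`neighbours`, `congestion`), the action names and the thresholds are all hypothetical, and the policy is hand-written here, whereas the article's policies are computed by optimisation.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class UnitState:
    """Locally observable state of one sensor unit (hypothetical fields)."""
    neighbours: int     # perceived local density of sensors
    congestion: float   # perceived congestion, 0.0 (idle) to 1.0 (saturated)


@dataclass(frozen=True)
class Rule:
    """One policy rule: a condition on the state and the action it triggers."""
    condition: Callable[[UnitState], bool]
    action: str


def select_action(policy: List[Rule], state: UnitState) -> Optional[str]:
    """Return the action of the first rule that matches the state, or None."""
    for rule in policy:
        if rule.condition(state):
            return rule.action
    return None


# Illustrative policy; rule order encodes priority (assumption).
POLICY = [
    Rule(lambda s: s.congestion > 0.8, "reduce_report_rate"),
    Rule(lambda s: s.neighbours >= 6, "scan_low_duty"),
    Rule(lambda s: s.neighbours <= 2, "scan_high_duty"),
]
```

A unit would call `select_action(POLICY, current_state)` on each cycle and apply the returned action, if any. Checking a short ordered list of conditions needs little memory or computation, which is consistent with the point above that policy execution suits resource-limited units.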

Each sensor unit has limited local awareness of its number of neighbours, routing choices and the degree of network congestion. Based on this information, the sensor units and the link and fusion nodes automatically configure scan mode, routing, fusion etc, for the best expected sensor network performance.

Our solution uses a Monte Carlo policy evaluation and value-function-based policy refinement method, an instance of the large family of approximate dynamic programming methods. Dynamic programming guarantees convergence to optimality for centralised control when the state is fully observable. Approximate dynamic programming offers tools for larger and more complex control problems, in many cases offering theoretical guarantees. However, in the case of partial observability and decentralised control, fewer guarantees are available or even possible. On the research frontier, tractable special cases are being identified.

In our future work, we aim to extend the model with policy-controlled intra-network communication for increasing and optimising system self-awareness. We will also carry out experiments using specific hardware units (eg ESB 430/1), physical environments and missions.
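To make the idea of Monte Carlo policy evaluation followed by value-based policy refinement concrete, here is a minimal sketch on a toy model. It is not the authors' tool or model: it considers a single unit with a fully observed two-valued state, whereas the article deals with decentralised control under partial observability. The states, actions, reward values, noise level and transition probability are all invented for illustration.

```python
import random

STATES = ("sparse", "dense")          # hypothetical local sensor density
ACTIONS = ("high_duty", "low_duty")   # hypothetical scan modes

# Assumed mean reward (coverage minus radio/energy cost) per state and action.
MEAN_REWARD = {
    ("sparse", "high_duty"): 0.7,
    ("sparse", "low_duty"): 0.3,
    ("dense", "high_duty"): 0.3,
    ("dense", "low_duty"): 0.8,
}


def step(state, action, rng):
    """Simulate one step: a noisy reward and an occasional density change."""
    reward = MEAN_REWARD[(state, action)] + rng.gauss(0.0, 0.1)
    if rng.random() < 0.1:  # eg a neighbouring unit fails or is moved
        state = "dense" if state == "sparse" else "sparse"
    return state, reward


def rollout(policy, state, first_action, rng, horizon=10, gamma=0.9):
    """Discounted return of taking first_action, then following the policy."""
    total, discount, action = 0.0, 1.0, first_action
    for _ in range(horizon):
        state, reward = step(state, action, rng)
        total += discount * reward
        discount *= gamma
        action = policy[state]
    return total


def evaluate(policy, rng, episodes=500):
    """Monte Carlo estimate of the action values Q(state, action)."""
    return {
        (s, a): sum(rollout(policy, s, a, rng) for _ in range(episodes)) / episodes
        for s in STATES
        for a in ACTIONS
    }


def refine(q):
    """Greedy refinement: choose the best estimated action in each state."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in STATES}


def optimise(iterations=3, seed=0):
    """Alternate evaluation and refinement from an arbitrary start policy."""
    rng = random.Random(seed)
    policy = {s: "high_duty" for s in STATES}
    for _ in range(iterations):
        policy = refine(evaluate(policy, rng))
    return policy
```

Calling `optimise()` returns a state-to-action mapping, which is the kind of rule set that could then be downloaded to a unit. In this toy model the simulated returns are averaged per state and action, and the policy is refined greedily against those averages; with many units acting on partial local information, the same loop can still be run in simulation, but, as noted above, convergence guarantees become much weaker.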
